When a network camera captures an image, light passes through the lens and falls on the image sensor. The image sensor consists of picture elements, known as pixels, which record the amount of light that falls on them.

Each pixel converts the light it receives into a corresponding number of electrons: the more intense the light, the more electrons are generated. These electrons are converted into a voltage, which is then turned into numbers by an A/D-converter. The resulting digital signal is processed by electronic circuits within the camera.
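To make the chain from light to numbers concrete, here is a minimal Python sketch of a single pixel. It is an idealized, noise-free linear model; the function name `pixel_to_digital` and all default values (quantum efficiency, full-well capacity, conversion gain, ADC bit depth and full scale) are hypothetical examples, not data for any real sensor.

```python
def pixel_to_digital(photons, quantum_efficiency=0.6, full_well_e=20000,
                     conversion_gain_uv=50.0, adc_bits=12,
                     adc_full_scale_mv=1000.0):
    """Convert the photons striking one pixel into a digital number (DN).

    All defaults are hypothetical example values, not data for a real sensor.
    Noise is ignored, so the result is an idealized value.
    """
    # Light -> electrons: proportional to the amount of light, but a pixel
    # can only hold a limited charge (its full-well capacity).
    electrons = min(photons * quantum_efficiency, full_well_e)
    # Electrons -> voltage (conversion gain given in microvolts per electron).
    voltage_mv = electrons * conversion_gain_uv / 1000.0
    # Voltage -> number: the A/D-converter maps 0..full scale onto 0..max_code.
    max_code = 2 ** adc_bits - 1
    code = round(voltage_mv / adc_full_scale_mv * max_code)
    return min(code, max_code)


print(pixel_to_digital(2000), pixel_to_digital(4000), pixel_to_digital(10**6))
```

With these assumed values, doubling the light from 2,000 to 4,000 photons roughly doubles the output (246 to 491), while a very bright input saturates the pixel at the maximum code of 4095.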

Overview of image sensors:

The original argument, a decade ago, for the revival of CMOS image sensors as a competitor to CCD technology was generally based on several ideas:

1 – Lithography and process control in CMOS fabrication had reached levels that would soon allow CMOS sensor image quality to rival that of CCDs.

2 – Integration of companion functions on the same die as the image sensor, creating camera-on-a-chip or SoC (system-on-a-chip) capabilities.

3 – Reduced power consumption.

4 – Decreased imaging system size because of integration and reduced power consumption.

5 – Using the same CMOS production lines as mainstream logic and memory device fabrication, delivering economies of scale for CMOS imager manufacturing.

Currently, two main technologies are used for the image sensor in a camera: CMOS (Complementary Metal-Oxide-Semiconductor) and CCD (Charge-Coupled Device). The following sections explain their designs and their respective strengths and weaknesses.

| Initial Prediction for CMOS | Twist | Outcome (CMOS vs. CCD) |
|---|---|---|
| Equivalence to CCD in image performance | Required much greater process adaptation and deeper submicron lithography than initially thought | High performance is available in both technologies today, but with higher development costs in most CMOS than CCD technologies |
| On-chip circuit integration | Longer development cycles, increased cost, trade-offs with noise and flexibility during operation | Greater integration in CMOS than CCD, but companion ICs are still often required with both |
| Economies of scale from using mainstream logic and memory foundries | Extensive process development and optimization required | Legacy logic and memory production lines are commonly used for CMOS imager production today, but with highly adapted processes akin to CCD fabrication |
| Reduced power consumption | Steady progress for CCDs diminished the margin of improvement for CMOS | CMOS ahead of CCDs |
| Reduced imaging subsystem size | Optics, companion chips, and packaging are often the dominant factors in imaging subsystem size | Comparable |

Color filtering:

Image sensors register only the amount of light, from bright to dark, with no color information. Because CCD and CMOS image sensors are ‘color blind,’ a filter in front of the sensor allows the sensor to assign a color tone to each pixel.

Two common color registration methods are CMYG (Cyan, Magenta, Yellow, and Green) and RGB (Red, Green, and Blue). Red, green, and blue are the primary colors which, mixed in different proportions, can produce most of the colors visible to the human eye.

The Bayer array, which has alternating rows of red-green and green-blue filters, is the most common RGB color filter. Because the human eye is more sensitive to green than to the other two colors, the Bayer array has twice as many green filters as red or blue ones. As a result, an image captured through a Bayer array appears more detailed to the human eye than it would if all three colors were used in equal measure in the filter.
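The Bayer layout can be expressed as a simple rule on row and column parity. The sketch below assumes the common RGGB ordering (other orderings such as GRBG exist); the helper names are hypothetical. It shows which single color each pixel keeps and confirms that half of all filters are green. Demosaicing, the step that interpolates the two missing colors at every pixel, is not shown.

```python
def bayer_color(row, col):
    """Filter color at (row, col) for an RGGB Bayer layout (assumed ordering).

    Even rows alternate red/green; odd rows alternate green/blue.
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb_image):
    """Keep only the channel each pixel's filter lets through.

    rgb_image is a list of rows of (r, g, b) tuples.
    """
    index = {"R": 0, "G": 1, "B": 2}
    return [[pixel[index[bayer_color(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(rgb_image)]


def count_filters(height, width):
    """Count how many filters of each color a height x width sensor has."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[bayer_color(r, c)] += 1
    return counts


print(count_filters(4, 4))
```

For a 4 × 4 patch the count is 4 red, 8 green, and 4 blue filters, the two-to-one ratio of green described above.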

An alternative way to filter or record color is to use the complementary colors cyan, magenta, and yellow. On sensors, complementary color filters are often combined with green filters to form a CMYG color array. The CMYG system generally provides higher pixel signals owing to its broader spectral bandpass.

However, the signals must then be converted to RGB, because RGB is used in the final image. The conversion adds noise and requires more processing, so the initial gain in signal-to-noise ratio is reduced. In addition, the CMYG system is not as good at representing colors accurately.
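The loss can be illustrated with an idealized model, which is an assumption made for illustration only: each complementary filter passes exactly two primaries (cyan = green + blue, magenta = red + blue, yellow = red + green), red, green, and blue signals are equal, and every channel carries independent noise of the same size. Recovering a primary then means adding and subtracting all three measurements, so their noise accumulates. The extra green channel of a real CMYG array is left out, and the function names are hypothetical.

```python
import math


def rgb_to_cmy(r, g, b):
    """Ideal complementary-filter responses (assumption: each filter passes
    exactly two primaries with no losses)."""
    cy = g + b  # cyan = green + blue
    mg = r + b  # magenta = red + blue
    ye = r + g  # yellow = red + green
    return cy, mg, ye


def cmy_to_rgb(cy, mg, ye):
    """Invert the ideal model; each primary needs all three measurements."""
    r = (mg + ye - cy) / 2
    g = (cy + ye - mg) / 2
    b = (cy + mg - ye) / 2
    return r, g, b


def noise_after_conversion(sigma):
    """Noise (std. dev.) of one recovered primary when each complementary
    channel carries independent noise sigma: r = (mg + ye - cy) / 2 gives a
    variance of 3 * sigma**2 / 4."""
    return math.sqrt(3 * sigma ** 2 / 4)


# Equal primaries S = 100 with per-channel noise sigma = 10 (hypothetical).
S, sigma = 100.0, 10.0
print("RGB pixel SNR:        ", S / sigma)
print("raw magenta pixel SNR:", 2 * S / sigma)
print("recovered red SNR:    ", round(S / noise_after_conversion(sigma), 2))
```

In this model a magenta pixel starts with twice the SNR of a red RGB pixel (20 versus 10), but after conversion the recovered red value has an SNR of only about 11.5, so most of the initial advantage is gone.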

The CMYG color array is typically used in interlaced CCD image sensors, whereas the RGB system is mainly used in progressive scan image sensors.

CCD technology:

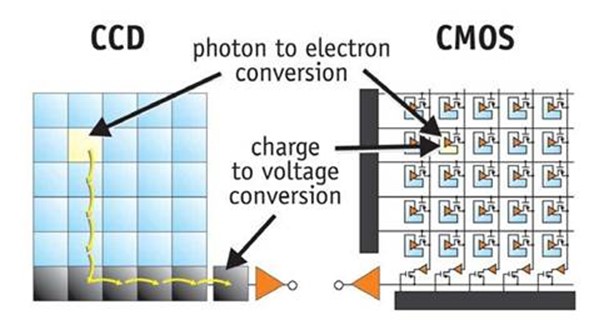

In a CCD sensor, light falls on the sensor’s pixels, and the resulting charge is transferred off the chip through a single output node or only a few output nodes. These charges are converted to voltage levels, buffered, and sent out as an analog signal. This signal is then amplified and converted to numbers by an A/D-converter outside the sensor.
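The serial, bucket-brigade readout can be sketched as a toy model in Python. The function name and geometry are assumptions: rows of charge are shifted one step at a time into a horizontal (serial) register, which then shifts each charge packet to a single output node. Charge-transfer losses and the analog conversion itself are not modeled.

```python
def ccd_readout(frame):
    """Return pixel charges in the order a single-output CCD delivers them,
    plus the number of shift clocks used.

    Assumes row 0 sits next to the horizontal (serial) register and column 0
    next to the output node; real devices differ in layout.
    """
    output = []
    shift_clocks = 0
    for row in frame:
        # Parallel (vertical) shift: the nearest row moves into the serial register.
        serial_register = list(row)
        shift_clocks += 1
        for charge in serial_register:
            # Serial (horizontal) shift: one charge packet reaches the single
            # output node, where it would be converted to a voltage.
            output.append(charge)
            shift_clocks += 1
    return output, shift_clocks


charges, clocks = ccd_readout([[1, 2, 3], [4, 5, 6]])
print(charges, clocks)
```

For a 2 × 3 frame, all six charges leave through the same node in row order after eight shift clocks, so readout time grows with the number of pixels.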

CCD technology was developed specifically for use in cameras, and CCD sensors have been in use for more than 30 years. Traditionally, they offered some advantages over CMOS sensors, including lower noise and better light sensitivity. In recent years, however, these differences have disappeared.

A drawback of CCD sensors is that they are analog components that require more electronic circuitry outside the sensor. They are also expensive to produce and can consume up to 100 times more power than CMOS sensors. This increased power consumption can cause heat problems in the camera, which not only degrade image quality but also raise the cost and environmental impact of the product.

CCD sensors also require a higher data rate, because all the data has to pass through a single output amplifier or only a few output amplifiers.
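A quick calculation shows why the number of outputs matters. The sketch below estimates how many pixels per second each output amplifier must handle; it ignores blanking intervals and other readout overhead, and the 1920×1080, 30 frames/s example is a hypothetical operating point. The function name is illustrative.

```python
def pixel_rate_per_output(width, height, fps, outputs=1):
    """Pixels per second that each output amplifier must deliver.

    Ignores blanking and other readout overhead, so real rates are higher.
    """
    return width * height * fps / outputs


# Hypothetical 1080p sensor at 30 frames/s.
single = pixel_rate_per_output(1920, 1080, 30)           # one output node
quad = pixel_rate_per_output(1920, 1080, 30, outputs=4)  # four output nodes
print(f"{single / 1e6:.1f} Mpixel/s per output with 1 output")
print(f"{quad / 1e6:.1f} Mpixel/s per output with 4 outputs")
```

With one output, about 62.2 million pixels per second must pass through a single amplifier; splitting the readout across four outputs cuts this to about 15.6 million per output, at the cost of extra hardware.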

CMOS technology:

Initially, ordinary CMOS chips were used for imaging, but image quality was poor because of their low light sensitivity. Modern CMOS sensors are made with more specialized technology, and their light sensitivity and image quality have improved rapidly in recent years.

CMOS chips offer several advantages. Unlike a CCD sensor, a CMOS chip includes A/D-converters and amplifiers, which lowers the cost of the camera because the chip contains all the logic needed to produce an image. Every CMOS pixel contains its own conversion electronics.

Compared to CCD sensors, CMOS sensors offer better integration possibilities and more functionality. However, adding circuitry inside the chip increases the risk of structured noise, such as stripes and other patterns. On the other hand, CMOS sensors offer higher noise immunity, lower power consumption, faster readout, and a smaller system size.

Calibrating a CMOS sensor in production (if needed) can be more difficult than calibrating a CCD sensor. However, technological advances have made CMOS sensors easier to calibrate, and some are now self-calibrating.

Individual pixels can be read from a CMOS sensor. This enables ‘windowing,’ which means that only part of the sensor area, rather than the whole area, is read out at a time.

As a result, a higher frame rate can be delivered from a limited part of the sensor, and digital PTZ (pan/tilt/zoom) functions can be used. A CMOS sensor also makes multi-view streaming possible: several cropped view areas can be streamed from the sensor simultaneously, in effect simulating several ‘virtual cameras.’
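Windowing and multi-view streaming can be sketched with frames held as lists of rows. The helpers below are hypothetical illustrations, not a camera API: `read_window` mimics reading out only part of the sensor, `windowed_fps` gives a rough frame-rate estimate under the assumption that readout time scales with the number of rows read, and `virtual_cameras` cuts several views from one frame, clamping each window to the sensor edges as a digital pan/tilt would.

```python
def read_window(frame, top, left, height, width):
    """Return only the requested region, as a windowed CMOS readout would."""
    return [row[left:left + width] for row in frame[top:top + height]]


def windowed_fps(full_fps, full_rows, window_rows):
    """Estimated frame rate when only window_rows of full_rows are read.

    Assumes readout time is proportional to the number of rows read and that
    readout (not exposure or interface bandwidth) limits the frame rate.
    """
    return full_fps * full_rows / window_rows


def clamp_window(top, left, height, width, sensor_h, sensor_w):
    """Keep a crop window inside the sensor area (used for digital pan/tilt)."""
    top = max(0, min(top, sensor_h - height))
    left = max(0, min(left, sensor_w - width))
    return top, left


def virtual_cameras(frame, views):
    """Cut several named windows from one frame, one stream per view.

    views maps a view name to (top, left, height, width).
    Digital zoom (rescaling each crop to the output size) is omitted.
    """
    sensor_h, sensor_w = len(frame), len(frame[0])
    streams = {}
    for name, (top, left, height, width) in views.items():
        top, left = clamp_window(top, left, height, width, sensor_h, sensor_w)
        streams[name] = read_window(frame, top, left, height, width)
    return streams


frame = [[r * 6 + c for c in range(6)] for r in range(4)]  # tiny 4 x 6 "sensor"
print(virtual_cameras(frame, {"door": (0, 0, 2, 2), "gate": (3, 5, 2, 2)}))
print(windowed_fps(30, 1080, 270))
```

Under the stated assumption, reading a quarter of the rows of a 1080-row sensor that runs at 30 frames/s full-frame would allow roughly 120 frames/s. The "gate" view, requested partly outside the sensor, is shifted back inside its edges.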

HDTV and megapixel sensors:

HDTV and megapixel technology enable network cameras to deliver higher-resolution video images than analog CCTV cameras. This improves the ability to see details and to identify objects and people, which is a major consideration in video surveillance applications.

An HDTV or megapixel network camera provides at least twice the resolution of a conventional analog CCTV camera. Megapixel sensors are fundamental components of HDTV, megapixel, and multi-megapixel cameras, and they can be used to deliver highly detailed images as well as multi-view streaming.
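The "at least twice" figure can be checked by comparing pixel counts. The sketch assumes 704×576 (4CIF, PAL) as the reference analog CCTV resolution; other analog formats would give somewhat different ratios. The function name is illustrative.

```python
def resolution_ratio(width, height, ref_width=704, ref_height=576):
    """Pixel count of a format relative to a reference format.

    The default reference, 704x576 (4CIF, PAL), is an assumed stand-in for
    analog CCTV resolution.
    """
    return (width * height) / (ref_width * ref_height)


for name, (w, h) in {"HDTV 720p": (1280, 720), "HDTV 1080p": (1920, 1080)}.items():
    print(f"{name}: {resolution_ratio(w, h):.1f}x the pixels of 4CIF")
```

Under this assumption, 720p has about 2.3 times and 1080p about 5.1 times as many pixels as the analog reference.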

Megapixel CMOS sensors are widely available and generally less expensive than megapixel CCD sensors, although there are also many examples of costly CMOS sensors.

It is difficult to make a fast megapixel CCD sensor. This is a disadvantage, and it makes developing a multi-megapixel camera with CCD technology more complex.

Many megapixel sensors are about the same size as VGA sensors, which have a resolution of 640×480 pixels. Because a megapixel sensor contains more pixels than a VGA sensor of the same size, each of its pixels is smaller.

Consequently, a megapixel sensor is usually less light-sensitive per pixel than a VGA sensor, because each pixel is smaller and the light reflected from an object is spread over more pixels. However, megapixel sensor technology is improving rapidly, and light-sensitivity performance continues to get better.
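The effect of smaller pixels can be estimated with simple geometry. The sketch assumes a sensor 4.8 mm wide (a typical width for a 1/3-inch format, used here only as an example) and compares a 640-column VGA layout with a 1280-column megapixel layout on the same area. It ignores fill factor, microlenses, and other technology differences; the function names are illustrative.

```python
def pixel_pitch_um(sensor_width_um, columns):
    """Pixel pitch in micrometres when `columns` pixels span the sensor width."""
    return sensor_width_um / columns


def relative_light_per_pixel(pitch_um, reference_pitch_um):
    """Light collected per pixel relative to a reference pixel.

    Light per pixel scales with pixel area, i.e. with the square of the pitch,
    for the same lens and scene.
    """
    return (pitch_um / reference_pitch_um) ** 2


SENSOR_WIDTH_UM = 4800  # assumed 4.8 mm wide sensor
vga = pixel_pitch_um(SENSOR_WIDTH_UM, 640)    # 7.5 um
mega = pixel_pitch_um(SENSOR_WIDTH_UM, 1280)  # 3.75 um
print(vga, mega, relative_light_per_pixel(mega, vga))  # 7.5 3.75 0.25
```

Doubling the number of columns and rows on the same sensor area halves the pitch, so each pixel receives about a quarter of the light.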

Key differences:

A CMOS sensor includes A/D-converters, amplifiers, and circuitry for additional processing. In a camera with a CCD sensor, by contrast, many signal-processing functions are performed outside the sensor.

A CMOS sensor permits windowing and multi-view streaming, which cannot be done with a CCD sensor. A CCD sensor generally has one charge-to-voltage converter per sensor, whereas a CMOS sensor has one per pixel. Because of its faster readout, a CMOS sensor is better suited to multi-megapixel cameras.

Recent technological advances have eliminated the difference in light sensitivity between CMOS and CCD sensors at a given price point.

Detailed Comparison of CCD and CMOS sensors:

i. System Integration:

CCD is an older technology, and peripheral components such as ADCs and timers cannot be integrated into the sensor itself. Additional circuitry is therefore required, which increases the overall size of a CCD-based system. CCD sensors are also made with specialized fabrication techniques, which makes the technology expensive.

With CMOS sensors, the camera functions can be integrated into a single chip or system, so CMOS-based cameras can be very compact.

ii. Power Consumption:

CCD sensors consume more power than CMOS sensors because of their capacitive architecture. Several power supplies are required for the different timing clocks, and typical CCD supply voltages range from 7 V to 10 V.

CMOS sensors consume less power than CCD sensors because they need only a single power supply, typically 3.3 V to 5 V. For applications in which power consumption is the key criterion, a CMOS sensor is therefore preferable to a CCD sensor.
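The link between a capacitive architecture, supply voltage, and power can be illustrated with the standard switching-power relation P = C·V²·f for a capacitive load charged and discharged once per clock cycle. The capacitance and clock frequency below are hypothetical, the function name is illustrative, and the model covers only clock-driving power, not a whole sensor’s power budget.

```python
def clock_power_w(capacitance_f, voltage_v, frequency_hz):
    """Power spent charging and discharging a capacitive load each clock cycle."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical load: 1 nF driven at 10 MHz, at a CCD-like and a CMOS-like voltage.
ccd_like = clock_power_w(1e-9, 10.0, 10e6)
cmos_like = clock_power_w(1e-9, 3.3, 10e6)
print(f"{ccd_like:.3f} W vs {cmos_like:.3f} W, ratio {ccd_like / cmos_like:.1f}")
```

Because power grows with the square of the voltage, driving the same load at 10 V instead of 3.3 V costs about 1 W versus 0.11 W here, roughly nine times as much.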

Because CMOS sensors consume less power than CCD sensors, the temperature inside the camera can be kept lower. Heat problems in CCD sensors can increase interference, although CMOS sensors can have more structured noise.

iii. Processing Speed:

A CCD sensor always has to read out the entire image, which limits its speed. Speed can be increased by using multiple shift registers, but this requires additional hardware.

A CMOS sensor can read out just a particular area of the image, so it can be faster than a CCD sensor, and its speed can be increased further by using multiple column select lines. Note, however, that the dynamic range of a CCD sensor is typically considerably higher than that of a CMOS sensor.
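Dynamic range is commonly expressed as the ratio of the largest signal a pixel can hold (its full-well capacity) to its noise floor (read noise), in decibels or in stops. The sketch below computes both; the 20,000-electron full well and 10-electron read noise are hypothetical values, not figures for any particular CCD or CMOS sensor, and the function names are illustrative.

```python
import math


def dynamic_range_db(full_well_e, read_noise_e):
    """Dynamic range in decibels: 20 * log10(largest signal / noise floor)."""
    return 20 * math.log10(full_well_e / read_noise_e)


def dynamic_range_stops(full_well_e, read_noise_e):
    """The same ratio in stops (factors of two)."""
    return math.log2(full_well_e / read_noise_e)


print(f"{dynamic_range_db(20000, 10):.1f} dB, "
      f"{dynamic_range_stops(20000, 10):.1f} stops")
```

These assumed values give about 66 dB, or just under 11 stops; a higher full well or a lower noise floor increases both figures.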

iv. Image Distortion:

Blooming, in which excess charge from an oversaturated pixel spills into neighboring pixels, becomes visible when a CCD sensor is exposed for an extended period. Anti-blooming techniques can reduce this effect.

In a CCD sensor, all pixels are exposed at once. Eliminating the rolling shutter effect requires exactly this: all pixels must be exposed at the same time, which is known as a global shutter. For this reason, CMOS sensors are now also available with global shutters. Because the entire frame is captured at once, there is no wobble, skew, smear, or partial exposure.

The most common kind of distortion in CMOS sensors is the rolling shutter effect, which occurs because the pixels are read out line by line. As a result, the effect becomes clearly noticeable whenever a fast-moving object is captured.

The parts of a frame are captured one after another rather than all at once, but they are displayed together, which introduces a time lag across the frame. The image may therefore wobble or appear skewed. High-end CMOS cameras, however, use more advanced sensors that reduce this effect.
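The skew can be reproduced with a simple timing model. The sketch assumes a vertical bar moving horizontally at constant speed and a fixed line time between the start of consecutive rows; both values and the function name are hypothetical. With a rolling shutter, each row samples the bar slightly later than the row above, so the bar is drawn slanted; with a global shutter, every row samples the same instant.

```python
def capture_bar(rows, bar_x0, speed_px_per_s, line_time_s, rolling=True):
    """Column at which a moving vertical bar appears in each row of one frame.

    The bar starts at column bar_x0 and moves right at speed_px_per_s.
    Rolling shutter: row r is sampled r * line_time_s after row 0.
    Global shutter: every row is sampled at the same instant (t = 0).
    """
    positions = []
    for r in range(rows):
        t = r * line_time_s if rolling else 0.0
        positions.append(bar_x0 + speed_px_per_s * t)
    return positions


# Hypothetical example: 1080 rows, 30 us line time, bar moving 1000 px/s.
rolling_frame = capture_bar(1080, 100, 1000, 30e-6)
global_frame = capture_bar(1080, 100, 1000, 30e-6, rolling=False)
print(f"rolling skew: {rolling_frame[-1] - rolling_frame[0]:.1f} px")
print(f"global skew:  {global_frame[-1] - global_frame[0]:.1f} px")
```

With these values the rolling shutter offsets the bottom of the bar by about 32 pixels relative to the top, while the global shutter shows no offset. A faster readout (shorter line time) reduces the skew proportionally.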

v. Noise and Sensitivity:

CMOS sensors have more noise owing to higher dark currents. In addition, because the charge-to-voltage conversion and amplification circuits are built into each pixel, less of the pixel area is left to collect light, so the fill factor of a CMOS sensor is lower than that of a CCD sensor. Consequently, a CMOS sensor is less sensitive than a CCD sensor.

In a CMOS sensor, every pixel has its own amplifier, and these amplifiers are not perfectly identical. The resulting non-uniform amplification shows up as additional noise. However, CMOS technology has advanced so far that the sensitivity and noise of CMOS sensors are now comparable to those of CCD sensors.
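The non-uniform amplification can be illustrated with a small simulation, together with the kind of per-pixel calibration (flat-field correction) that reduces it. The gain spread, signal level, and function names are hypothetical, and only gain variation is modeled; real fixed-pattern noise also includes per-pixel offsets.

```python
import random
import statistics


def simulate_gain_fpn(width, height, signal, gain_spread, seed=0):
    """Uniformly lit frame read through per-pixel amplifiers with unequal gains.

    gain_spread is the assumed standard deviation of the gains around 1.0.
    Returns the raw frame and the per-pixel gains.
    """
    rng = random.Random(seed)
    gains = [[rng.gauss(1.0, gain_spread) for _ in range(width)]
             for _ in range(height)]
    raw = [[signal * g for g in row] for row in gains]
    return raw, gains


def flat_field_correct(raw, gains):
    """Divide each pixel by its calibrated gain (measured beforehand, e.g. from
    a uniformly lit reference frame)."""
    return [[v / g for v, g in zip(raw_row, gain_row)]
            for raw_row, gain_row in zip(raw, gains)]


def pixel_spread(frame):
    """Standard deviation across all pixels: zero for a perfectly uniform frame."""
    return statistics.pstdev(v for row in frame for v in row)


raw, gains = simulate_gain_fpn(64, 48, signal=1000.0, gain_spread=0.02)
print(f"before correction: {pixel_spread(raw):.1f}")
print(f"after correction:  {pixel_spread(flat_field_correct(raw, gains)):.6f}")
```

A uniformly lit scene should produce identical pixel values; with a 2 % gain spread the raw frame varies by roughly 20 units around 1000, and dividing out the calibrated gains removes this pattern.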

vi. Construction:

A CCD chip collects charge in its pixels when light strikes them and then transfers the pixel values off the chip one by one. This increases the time needed to transmit an image, and the continuous shifting of charge consumes a lot of power.

In CMOS sensor chips, each pixel has plenty of built-in circuitry, so pixels can be read directly at the photosensor level instead of being shifted across the chip one by one. This shortens the time needed to transmit an image and consumes less energy.

vii. Vertical Streaking:

When CCD-based cameras are used in video or live mode, they can show vertical streaking: a bright vertical line through the image. Because many pixels share the same column, charge that overflows from one saturated pixel leaks along the entire column, creating a vertical line. In other modes, however, CCD sensors do not show this behavior.

CMOS sensors do not have this problem, because each pixel circuit is isolated from the other circuits on the chip.
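The overflow mechanism can be sketched as a toy model that contrasts pixels sharing a column transfer path with isolated pixels. The model is a simplification made for illustration, and the function names are hypothetical: excess charge flows only downward along the column, and smear caused by light striking the sensor during readout is not modeled.

```python
def ccd_column_overflow(frame, full_well):
    """Clip each pixel at full_well and pass the excess charge to the next pixel
    down the same column, a simplified model of CCD overflow streaking."""
    out = [list(row) for row in frame]
    for c in range(len(out[0])):
        carry = 0
        for r in range(len(out)):
            value = out[r][c] + carry
            carry = max(0, value - full_well)
            out[r][c] = min(value, full_well)
        # Any charge still carried past the last row is simply discarded here.
    return out


def isolated_pixels(frame, full_well):
    """Pixels that do not share charge: excess is just clipped in place."""
    return [[min(v, full_well) for v in row] for row in frame]


scene = [[0] * 3 for _ in range(5)]
scene[1][1] = 350  # one very bright spot, 3.5x the full-well capacity
for row in ccd_column_overflow(scene, full_well=100):
    print(row)
```

In the shared-column model the single bright spot turns into a run of saturated pixels down its column, a vertical line; with isolated pixels only the bright pixel itself saturates.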

viii. Image Quality:

CCD sensors have lower noise levels, because their layout leaves more of the sensor surface available for capturing light. As a result, the colors of the captured images are more vibrant, which improves image quality.

Conversely, because of their in-pixel circuitry, CMOS sensors devote less of their surface to capturing light, which can negatively affect image quality.

Because CCD technology is more mature than CMOS technology, its image quality is better. Its drawbacks, however, are higher power consumption and streaking.

ix. Application:

CCD sensors have been widely used in DSLR cameras. CMOS sensors, by contrast, are used extensively in mobile phones, tablets, digital cameras, and similar devices because of their lower cost and longer battery life.

CCD vs CMOS Sensor:

| Parameter | CCD Sensor | CMOS Sensor |
|---|---|---|
| Resolution | Up to 100+ MP; sensor element size limits resolution | More than 100 MP supported |
| Frame rate | Best for lower frame rates | Best for higher frame rates |
| Color depth | Higher (16+ bits is standard for expensive CCDs) | Lower (12-16 bits is standard) |
| Responsivity and linearity | Lower responsivity, wider linear range | Higher responsivity, narrower linear range (saturates early) |
| Limit of detection | Low (more sensitive at low intensity) | High (less sensitive at low intensity) |
| Noise | Lower noise floor leads to higher image quality | Higher noise floor leads to lower image quality |
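To put the color-depth row in perspective, the sketch below converts bit depths into the number of gray levels per channel and into the dynamic range that quantization alone could support. It is simple arithmetic, not a model of any specific sensor, and the function names are illustrative.

```python
import math


def gray_levels(bits):
    """Number of distinct values per channel at a given bit depth."""
    return 2 ** bits


def quantization_range_db(bits):
    """Upper limit on dynamic range set by the bit depth alone (about 6 dB per bit)."""
    return 20 * math.log10(2 ** bits)


for bits in (12, 14, 16):
    print(bits, gray_levels(bits), f"{quantization_range_db(bits):.1f} dB")
```

A 16-bit output distinguishes 65,536 levels per channel, against 4,096 at 12 bits, although the sensor’s own noise floor can keep the usable range below this limit.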

Conclusion:

CCD and CMOS sensors each have their own benefits, and both technologies are evolving rapidly. The best strategy for a camera manufacturer is to continually evaluate and test sensors for every camera being developed. Whether the selected sensor is based on CMOS or CCD then becomes irrelevant; the only consideration is whether the sensor can be used to build a network camera that delivers the expected image quality and meets the customers’ video surveillance needs.

You can use a CCD sensor to benefit from better image quality and lower noise, and a CMOS sensor to benefit from faster readout, lower power consumption, lower cost, and longer battery life.